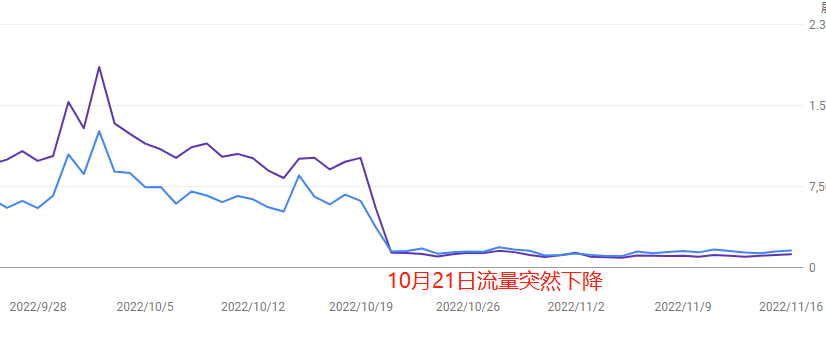

When website traffic suddenly spikes and then crashes, many site owners immediately think: “Did Google update its algorithm again?”

But in reality, dramatic traffic swings are usually the result of multiple factors working together—it might be the “collateral damage” of an algorithm tweak, or it could be due to technical issues on your own site, declining content quality catching up with you, or even targeted actions from competitors.

This article takes a practical approach, using a step-by-step self-check process to quickly figure out whether your site got hit by an algorithm change or if the problem is coming from within—helping you avoid rash redesigns that could make things even worse.

First, check if a Google algorithm update is to blame

When traffic suddenly drops, webmasters often reflexively suspect that Google updated its algorithm again.

But blaming the algorithm blindly could cause you to overlook more direct, critical issues.

1. Check Google’s official update calendar (Search Central Blog)

- How to do it: Go to the official Google Search Central Blog and open the “What’s new in Google Search” section. Look through the updates from the past 3 months. Core algorithm updates are usually labeled “Core Update,” while updates to specific systems (like the reviews system or E-E-A-T guidance) are explained separately.

- Heads-up: Smaller updates (like link spam cleanups) may not be announced publicly. You’ll need to cross-check using tool-based fluctuation data.

- Example: During the core update in August 2023, many medical and financial sites saw traffic drop 30%-50% due to lack of content authority.

2. Use SEO tools to track fluctuation timing (recommended free tools: SEMrush Sensor, RankRanger)

- Pro tip: In SEMrush Sensor, check the “volatility score” from about 3 days before your traffic drop (a score above 7 usually signals something unusual). For example, if a tool site’s traffic crashed 40% on Sept 5, and SEMrush showed a volatility score of 8.2 on Sept 3, it likely lines up with a spammy link crackdown that wasn’t publicly disclosed.

- Cross-check: Also look at the “performance” graph in Google Search Console. If the drop in organic clicks aligns with high volatility in the tools, the odds of algorithm impact are higher.
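The timing check above can be sketched in a few lines of Python. This is a minimal illustration, not a SEMrush integration: the scores are typed in by hand, the function name `volatility_precedes_drop` is made up for this example, and the year 2023 is an arbitrary placeholder because the example above only gives the day and month.

```python
from datetime import date, timedelta

def volatility_precedes_drop(drop_day, scores, window_days=3, threshold=7.0):
    """Return the days in the window before drop_day whose score exceeds threshold.

    scores maps a date to the volatility score you read off the tool by hand.
    """
    start = drop_day - timedelta(days=window_days)
    return sorted(
        day for day, score in scores.items()
        if start <= day < drop_day and score > threshold
    )

# Hand-entered sample values (assumed, apart from the 8.2 on Sept 3).
scores = {
    date(2023, 9, 2): 4.1,
    date(2023, 9, 3): 8.2,
    date(2023, 9, 4): 5.0,
}
flagged = volatility_precedes_drop(date(2023, 9, 5), scores)
print(flagged)  # a non-empty list means volatility spiked shortly before the drop
```

An empty result does not rule out an algorithm update. It only means the tool data gives no supporting signal for that window.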

3. Compare competitor traffic (using SimilarWeb)

- Steps: Go to SimilarWeb, plug in three competitor domains, and check the “Organic Traffic” trend chart. If their traffic dropped around the same time (e.g., all down by 20% or more), it’s probably an industry-wide algorithm update. If only your site dropped, you should first look for internal issues.

- Counterintuitive insight: In some niche industries (like web hosting review sites), top-ranking sites might actually get a boost from the algorithm and go up while everyone else drops. So make sure you analyze the full TOP 10 range of competitors.
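The comparison rule above can be written down as a small helper. This is a sketch under assumptions: the traffic figures are hypothetical numbers you would copy from the trend charts yourself, `classify_drop` is an illustrative name, and the "mixed" outcome is an addition here to cover the case where some competitors rise while others fall.

```python
def pct_change(before, after):
    """Percentage change from before to after."""
    return (after - before) / before * 100.0

def classify_drop(own, competitors, threshold=-20.0):
    """Classify a traffic drop from (before, after) visit counts.

    own is a (before, after) tuple; competitors maps a domain to the same.
    """
    if pct_change(*own) > threshold:
        return "no significant drop"
    changes = [pct_change(b, a) for b, a in competitors.values()]
    dropped = sum(1 for change in changes if change <= threshold)
    if changes and dropped == len(changes):
        return "industry-wide"   # everyone fell: likely an algorithm update
    if dropped == 0:
        return "site-specific"   # only you fell: look for internal issues first
    return "mixed"               # some rivals fell, others held or gained

# Hypothetical monthly organic visits (before, after).
rivals = {"a.example": (500, 380), "b.example": (800, 600), "c.example": (300, 200)}
print(classify_drop((1000, 600), rivals))
```

A "mixed" result is where the top-10 analysis matters most, since the sites that gained may be the ones the update rewarded.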

Urgently check for technical breakdowns

Technical failures usually have a recovery window of just 48 hours—delays can lead to long-term ranking loss.

1. Check server status (example uses Pingdom to detect downtime)

- How to do it: Log into Pingdom or UptimeRobot (the free plan checks at 5-minute intervals), and check if there was any “Downtime” (marked in red) during the traffic drop period. For example, one e-commerce site crashed during a big sale and was offline for 3 hours, causing a 60% loss in organic traffic.

- Heads-up: Some CDN providers (like Cloudflare) may have outages that only affect certain regions. Use Geopeeker to simulate access from different global locations.

- Emergency fix: If downtime is confirmed, immediately contact your hosting provider to upgrade capacity or migrate servers. Then use the URL Inspection tool in GSC to request indexing for the affected URLs and speed up recrawling.

2. Check page loading speed (using PageSpeed Insights)

- Key metrics: Use Google PageSpeed Insights to analyze the affected pages. Focus on “LCP” (Largest Contentful Paint)—it should be under 2.5 seconds. If your mobile score is below 50, Google might downgrade your page experience score.

- Example: A blog site didn’t compress a huge homepage image (3MB!), causing mobile load times to hit 8 seconds. Google dropped the ranking from page 2 to beyond the top 100.

- Top fixes: Compress images (TinyPNG), lazy-load off-screen content (LazyLoad plugin), and clean up unused CSS/JS using tools like PurgeCSS.
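If you are checking many pages, the two thresholds above are easy to apply in bulk. The sketch below assumes you have already copied the LCP and mobile score for each page out of PageSpeed Insights. It does not call any API, and the function name and sample values are hypothetical.

```python
def page_speed_issues(lcp_seconds, mobile_score, lcp_limit=2.5, score_floor=50):
    """List which of the two thresholds a page fails, if any."""
    issues = []
    if lcp_seconds >= lcp_limit:
        issues.append(f"LCP {lcp_seconds:.1f}s is not under {lcp_limit}s")
    if mobile_score < score_floor:
        issues.append(f"mobile score {mobile_score} is below {score_floor}")
    return issues

# Hypothetical readings for a slow page and a healthy page.
print(page_speed_issues(8.0, 35))
print(page_speed_issues(1.9, 82))
```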

3. Track crawl errors (via Google Search Console Coverage Report)

- Where to look: In GSC → Coverage → “Errors” tab, filter for critical issues like “Submitted URL blocked by robots.txt” or “Server errors (5xx)” around the time of the traffic drop. A 200%+ spike in crawl errors in a single day can tank your index count.

- Gotcha: Some WordPress plugin updates can silently rewrite your robots.txt file. A rule meant to block only the /admin path can end up as a site-wide block that stops Google from crawling your entire site.

- Quick fix: Use the “URL Inspection Tool” in GSC to request urgent re-crawling, and after fixing the problem, submit a “Validate Fix” request.
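One quick way to confirm a robots.txt problem is to test a handful of important URLs against the file's contents. The sketch below uses Python's standard `urllib.robotparser` module. The two robots.txt samples and the example.com URLs are made up for illustration, and the standard-library parser may not match Googlebot's own matching rules in every edge case.

```python
from urllib.robotparser import RobotFileParser

def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

# What the file was meant to say: block only the admin area.
intended = "User-agent: *\nDisallow: /admin\n"
# What a bad rewrite might leave behind: block everything.
broken = "User-agent: *\nDisallow: /\n"

urls = [
    "https://example.com/",
    "https://example.com/blog/post",
    "https://example.com/admin/login",
]
print(blocked_urls(intended, urls))
print(blocked_urls(broken, urls))
```

If the second call lists your homepage or money pages, fix the file before requesting any recrawl.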

Algorithm “Payback” for Low-Quality Content

One of the trickiest causes of a traffic crash often hides in the content itself—you might have gotten some short-term traffic with low-quality content, but Google has gotten much better at “delayed punishment” using models like BERT and MUM to detect thin, AI-generated, or over-optimized pages.

This kind of “payback” usually hits 1–2 weeks after an algorithm update and can take months to recover from.

1. Check How Much AI-Generated/Scraped Content You Have (Manually audit at least 20% of your pages)

- Detection Tools: Use Originality.ai or GPTZero to scan pages with high bounce rates. Look for paragraphs that are “too smooth but lack depth” (like formulaic five-paragraph structures or overuse of transition words).

- Case Study: A tech blog used ChatGPT to mass-produce 50 “How to fix XXX error” guides. Rankings were steady at first, but after Google’s October 2023 spam update, their traffic was cut in half.

- Emergency Move: For pages where AI content makes up more than 30%, immediately add original elements like real-world case studies or user interviews. Replace any wall of text over 300 words with videos or charts.

2. Bounce Rate of New Traffic Pages (Check with GA4 Behavior Reports)

- Where to Look: In GA4, go to “Reports → Engagement → Pages and screens” (or the “Landing page” report) and filter for landing pages that appeared in the 30 days before the traffic drop. If a page’s bounce rate is 15 percentage points above your site average (e.g., site avg. is 50%, page shows 65%), Google may be downranking those pages.

- Surprising Insight: Some clickbaity posts get high CTRs but have users leaving in under 10 seconds. The algorithm may flag them as misleading.

- Fix Tips: Add “Table of Contents” anchor links or pop-ups for “Related Solutions” to keep users engaged longer on low-quality pages.
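The bounce-rate comparison above is simple enough to run over an exported report. This sketch assumes you have exported page paths and bounce rates (in percent) from GA4 into a dictionary. The function name and the sample pages are hypothetical.

```python
def flag_high_bounce(pages, site_avg, margin_points=15.0):
    """Return paths whose bounce rate is at least margin_points above site_avg.

    All rates are percentages, so the margin is in percentage points.
    """
    return sorted(
        path for path, rate in pages.items()
        if rate - site_avg >= margin_points
    )

# Hypothetical export: landing page -> bounce rate in percent.
pages = {"/fix-error-a": 65.0, "/guide-b": 52.0, "/clickbait-c": 88.0}
print(flag_high_bounce(pages, site_avg=50.0))
```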

3. Sudden Influx of Backlinks – Check Their Quality (Using Ahrefs and Spam Score)

- Risk Signals: Use Ahrefs to list new backlinks from the 2 months before your traffic drop, then check their “Spam Score” (a Moz metric; Ahrefs does not report one under that name). If the score exceeds 40 (out of 100) or if many links come from the same Class C subnet (e.g., 203.0.113.XX), it may have triggered a penalty.

- High-Risk Example: A travel site bought 50 forum links with anchor text about “Cambodian casinos” to boost rankings, which led to the entire site being flagged as an “untrustworthy source.”

- Damage Control: Submit a disavow file in Google Search Console and remove any on-site content closely related to those spammy backlinks.
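The two risk signals and the disavow step can be combined into a short script. This is a sketch under assumptions: the backlink rows are hypothetical data you would export yourself, the `subnet_limit` of 3 is an arbitrary stand-in for "many links", and the function names are illustrative. The output follows the disavow file format Google documents: one `domain:` entry per line, with `#` for comments.

```python
import ipaddress
from collections import Counter

def subnet_of(ip):
    """Return the /24 network (the old 'Class C' block) containing ip."""
    return ipaddress.ip_network(f"{ip}/24", strict=False)

def risky_domains(backlinks, spam_threshold=40, subnet_limit=3):
    """Flag domains with a high spam score or a crowded /24 subnet."""
    per_subnet = Counter(subnet_of(link["ip"]) for link in backlinks)
    return sorted({
        link["domain"] for link in backlinks
        if link["spam_score"] > spam_threshold
        or per_subnet[subnet_of(link["ip"])] >= subnet_limit
    })

def disavow_file(domains):
    """Build disavow file text with one domain: entry per line."""
    lines = ["# Domains flagged during backlink audit"]
    lines += [f"domain:{domain}" for domain in domains]
    return "\n".join(lines) + "\n"

# Hypothetical export; IPs come from reserved documentation ranges.
links = [
    {"domain": "forum-a.example", "ip": "203.0.113.10", "spam_score": 12},
    {"domain": "forum-b.example", "ip": "203.0.113.22", "spam_score": 8},
    {"domain": "forum-c.example", "ip": "203.0.113.41", "spam_score": 15},
    {"domain": "news.example", "ip": "198.51.100.7", "spam_score": 5},
    {"domain": "casino-links.example", "ip": "192.0.2.9", "spam_score": 71},
]
print(disavow_file(risky_domains(links)))
```

Review the flagged list by hand before uploading anything, since disavowing good links can hurt as well.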

Targeted Actions by Competitors

A traffic drop isn’t always your fault—it could be your competitors making silent moves behind the scenes. They might be snatching your featured snippets, rapidly updating related content, or even running aggressive ads targeting your keywords.

Google’s organic ranking is a zero-sum game—if your competitor suddenly steps up, your traffic can get “strategically dismantled.”

1. Did a Competitor Grab Your Featured Snippet? (Manually Search Core Keywords)

- How to Check: In Google’s Incognito Mode, search your top 3 traffic keywords. If a competitor’s page appears in the “Featured Snippet” (gray box) or “People also ask” section, and your page has dropped to position 2 or lower, that’s a classic sign of a traffic hijack.

- Example: A tools site originally held the snippet for “PDF converter,” but a competitor used a step-by-step list (like “1. Upload file → 2. Select format → 3. Click download”) with comparison tables and stole 35% of clicks within 7 days.

- Counter Strategy: Use AnswerThePublic to find long-tail questions, then add a “Q&A” section to your page with numbered steps and subheadings (H2/H3) for structured content.

2. Competitor Content Update Frequency (Check with Screaming Frog)

- How to Track: Enter the competitor’s domain in Screaming Frog and filter by “Last Modified” to see which directories were recently updated. If they’re suddenly publishing 5 new posts a day under “/blog/” on topics overlapping with your top pages, that’s likely a content attack.

- Heads-Up: Some competitors fake updates by changing the publish date without changing the content. Use Diffchecker to compare historical snapshots and confirm real edits.

- Action Plan: For topics being targeted, publish in-depth content like industry research, tutorial videos, or comparison tables to stand out with richer material.
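The fake-update check above can also be done locally once you have two saved snapshots of a competitor page's body text. The sketch below uses Python's standard `difflib` module in place of Diffchecker. The 5% cutoff and the function names are assumptions chosen for illustration.

```python
import difflib

def changed_ratio(old_text, new_text):
    """Return the share of word-level content that differs (0.0 means identical)."""
    matcher = difflib.SequenceMatcher(None, old_text.split(), new_text.split())
    return 1.0 - matcher.ratio()

def is_real_update(old_text, new_text, min_change=0.05):
    """Treat the page as genuinely edited only if enough words changed."""
    return changed_ratio(old_text, new_text) >= min_change

# Hypothetical snapshots of the same article body.
old = "Upload the file then select a format and click download"
same = "Upload the file then select a format and click download"
expanded = old + " or compare output quality in the table below before converting"

print(is_real_update(old, same))      # publish date changed, body did not
print(is_real_update(old, expanded))  # body gained new material
```

Strip dates, navigation, and sidebars from the snapshots first, or template changes will register as content edits.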

3. Are They Running Ads to Steal Your Clicks? (Check SpyFu Ad History)

- What to Use: Go to SpyFu, enter the competitor’s domain, and check the “Ad History” tab to see what Google Ads keywords they’ve been targeting. If they started bidding on your brand name or key long-tail terms during your traffic dip (like “YourBrand alternative”), it’s a sign of ad hijacking.

- Evidence Collection: Use PPC ad preview tools (like SEMrush Advertising Research) to screenshot the ad copy. If it says things like “Cheaper than XXX (your brand),” you can report it to Google for violating the Comparative Advertising Policy.

- Urgent Response: Add a “brand match exclusion” for those keywords in Google Ads and put an “Official Certification” badge on your landing page to boost credibility.

Recovering your traffic usually takes 1–3 months. Submitting reconsideration requests over and over can look like manipulation and backfire.